Last night at 4:45 AM I presented my capstone project and completed my first Maven Bootcamp, thanks to Learnest.

What key concepts did I learn?

Since I have already written about the first part of the bootcamp, I’ll focus here on some of the most valuable concepts and principles I’m taking with me:

- We are inevitably facing a new divide

- Equitable access to AI matters, and there are frameworks to help us think about it

- The AI practitioner learning curve: From prompting to fine-tuning

1. The AI divide

The deeper I explore this tool, the more similarities I find with all the other waves we’ve gone through, particularly with the internet wave.

As described by Brookings, we are inevitably approaching a new iteration of the classic “digital divide”, which allows some people to access exponential benefits in productivity, opportunities and progress, while the majority struggles to keep pace and stay competitive. In a nutshell:

The first digital divide: The rich have technology, while the poor do not.

The second digital divide: The rich have technology and the skills to use it effectively, while the poor have technology but lack skills to use it effectively.

The third digital divide?: The rich have access to both technology and people to help them use it, while the poor have access to technology only.

In the Studio language, I’d say that the third digital divide is between those who have an AI learning community and those who don’t.

2. Equitable Access to AI

Although economic and societal forces seem bound to push us towards an even more polarised world, with just a few benefiting from this powerful tool, I choose to keep a tiny light of optimism for the human potential it brings. Here’s why I remain cautiously optimistic:

What does equitable access mean in an educational context?

Equal access to the BENEFITS of a product, service, resource, or solution that improves educational outcomes.

If we don’t do anything different, AI won’t level the playing field. As we are starting to see with AI products and subscriptions, only those who can afford them will have access to the latest versions. This will accentuate the differences between rich and poor. The same old story.

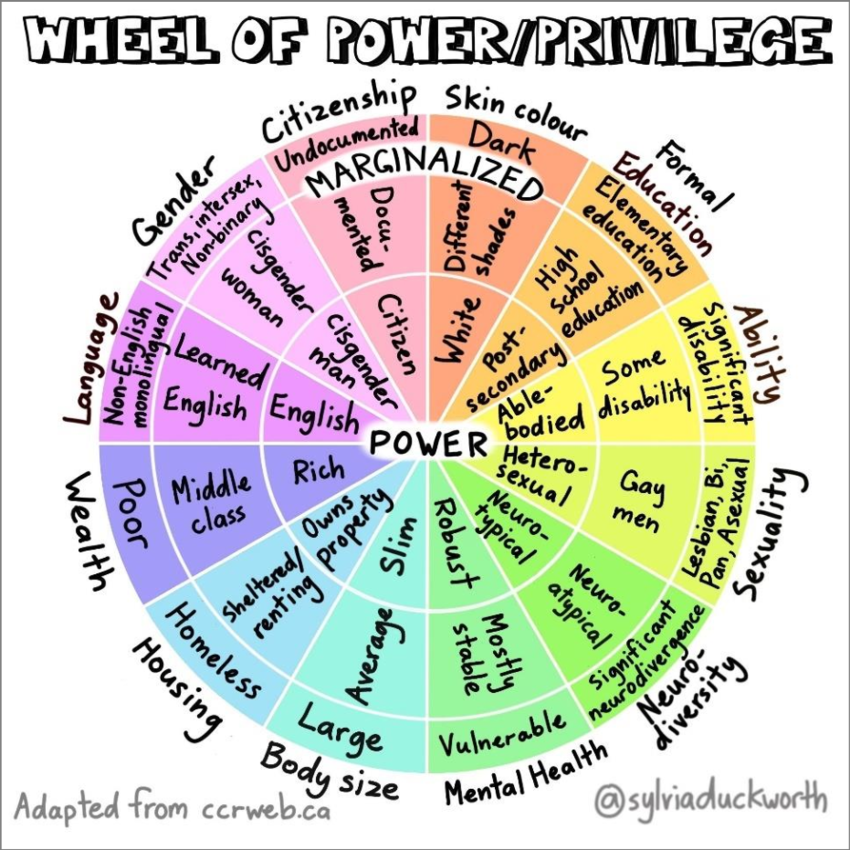

However, I’m happy to learn about frameworks such as Intersectionality (Kimberlé Crenshaw, 1989), whose core idea is that people are not defined by a single identity factor—like race, gender, class, sexuality, disability, or age—but rather by the combination of all these factors. These intersections can amplify privilege or disadvantage in complex ways.

How might different students experience the same AI tool differently based on their intersecting identities?

It was also very useful to learn about the Wheel of Power (Sylvia Duckworth, 2022) and the Matrix of Domination (Patricia Hill Collins, 1990), which explain how systems of oppression (racism, sexism, classism, ableism) operate at personal, cultural, and institutional levels simultaneously.

In AI edtech, this helps us understand how technologies can reinforce oppression at multiple levels (e.g., individual bias in AI recommendations → institutional power in determining who controls educational data and use).

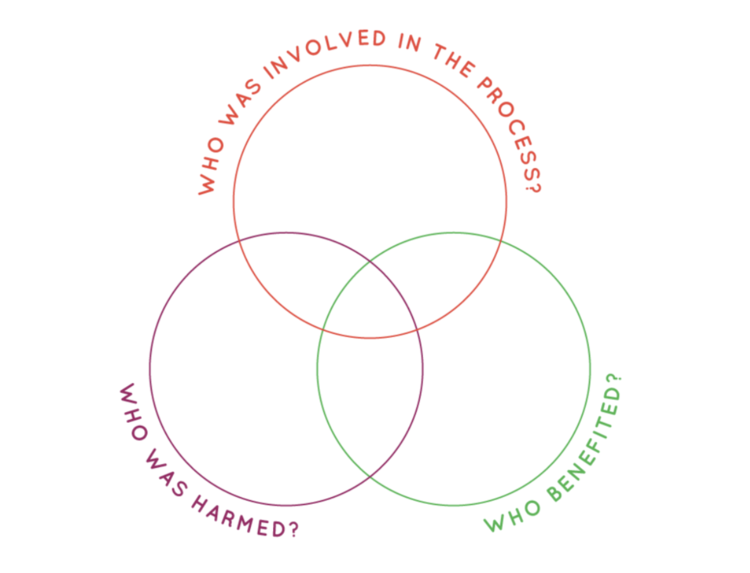

The final concept of this module was Design Justice (Sasha Costanza-Chock, 2020), which provides actionable principles for equitable technology development by centering marginalized communities, prioritizing impact over intentions, and honoring existing knowledge systems.

Design Justice is a framework and a practice that:

- Analyzes how design distributes benefits and burdens between various groups of people

- Focuses explicitly on the ways that design reproduces and/or challenges the matrix of domination

- Ensures meaningful participation in design decisions and recognizes community-based practices

3. The AI Practitioner learning curve

This was probably what I was looking for when I decided to take this bootcamp. What are the different “layers” that we need to learn when we aim to master AI? Here’s what I found:

1. Prompting: The first step is to learn how to prompt a Large Language Model (such as the ones behind ChatGPT, Gemini or Perplexity) properly. Developing this skill is crucial, and at some point we’ll probably equate it to learning an “AI language”, as if it were English or Portuguese.

2. RAG (Retrieval Augmented Generation): This second step is about supplying the Large Language Model with additional, specific knowledge retrieved at question time, which gives its answers more context and personalisation.

3. LLM Workflows: Once we know how to prompt and augment a Large Language Model, the next step in the learning curve is to chain augmented LLMs together to provide an accurate, adaptive solution to a problem.

4. Fine-Tuning: The last layer we discussed at the bootcamp was fine-tuning, which means “rewiring” the LLM by training it further on datapoints that were not available to it before, or on synthetic data that we’ve generated.
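To make the first three layers a bit more concrete, here is a minimal Python sketch. It is only an illustration under loud assumptions, not what we built at the bootcamp: `call_llm` is a hypothetical stand-in that echoes its prompt instead of calling a real model, the retrieval step uses naive word overlap where a real RAG system would typically use embeddings, and the notes in `NOTES` are made up. Fine-tuning is left out because it requires actually training a model.

```python
import re
from collections import Counter

# Made-up knowledge base for illustration only.
NOTES = [
    "The digital divide separates people with access to technology from those without.",
    "Intersectionality looks at how identity factors combine.",
    "Fine-tuning trains a model further on new data.",
]

def call_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call: it only echoes the prompt."""
    return f"[model answer based on: {prompt}]"

# Layer 1, prompting: a prompt is carefully structured text.
def build_prompt(question: str, context: str = "") -> str:
    parts = ["You are a helpful tutor."]
    if context:
        parts.append(f"Use this context: {context}")
    parts.append(f"Question: {question}")
    return "\n".join(parts)

# Layer 2, RAG: pick the note that shares the most words with the question.
def tokenize(text: str) -> Counter:
    return Counter(re.findall(r"[a-z]+", text.lower()))

def retrieve(question: str, documents: list[str]) -> str:
    query = tokenize(question)
    return max(documents, key=lambda doc: sum((query & tokenize(doc)).values()))

# Layer 3, workflow: chain two calls, the first one augmented with retrieval.
def answer_then_simplify(question: str, documents: list[str]) -> str:
    context = retrieve(question, documents)
    draft = call_llm(build_prompt(question, context))
    return call_llm(build_prompt(f"Rewrite this for a beginner: {draft}"))

if __name__ == "__main__":
    print(answer_then_simplify("What is the digital divide?", NOTES))
```

Swapping `call_llm` for a real model client and `retrieve` for a proper search over your own documents would leave the overall shape of the three layers the same.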

Each of those stages requires a considerable amount of time and effort to master, which brings me to my final point.

Tools change, principles remain

During my conversations with other Edtech practitioners, we agreed that the most difficult part is to remain principled and not to look for quick answers, fixes or solutions without critically evaluating the output of the tool.

In order to build sturdy and durable houses, you must learn certain principles: mathematics, physics, materials, etc. Learning principles is not easy, and it is not fast. It requires dedication and practice. Someone who has mastered the principles of house-building will be able to build both a small countryside house and a fancy tower in Manhattan. The tools change, the principles remain.

And this is my last reflection on this course: We can’t just learn to use the tool, because then the tool will own us and we’ll depend on it. However, if we learn the principles, this tool will elevate us to a level we’ve never experienced.

The AI Learning Group at Minds Studio

As I said in my previous post, Minds Studio is not going to become an AI-first company, the same way it’s not “internet-first” either.

However, I’ve started a new Practice where those of us interested in mastering AI tools come together regularly to help each other integrate them into our own professional activities.

My Capstone Project

In order to make my capstone project relevant to the problems I’ve witnessed while running Communities of Practice, I decided to spend some time building a tool that helps students log their “aha! moments” and “paradigm shifts” so they can visualize their transformation over time. I’ve called it “Significant Change Tracker”, as it uses a methodology called Significant Change on top of an LLM to make it conversational.

You can take a look and play with it here.